They still consider it a beta but there we go! It’s happening :D

I was able to contribute a script (`convert-llama-ggmlv3-to-gguf.py`) to convert GGML models to GGUF, so you can potentially still use your existing models. Ideally it should be used with the metadata from the original model, since converting vocab from GGML to GGUF without that is imperfect. (By metadata I mean stuff like the HuggingFace `config.json`, `tokenizer.model`, etc.)

And now TheBloke is taking time off to convert all of his nearly 800 converted models?

Sorry, I’m trying to get in the loop on this stuff. What’s the significance of this, and who will it affect?

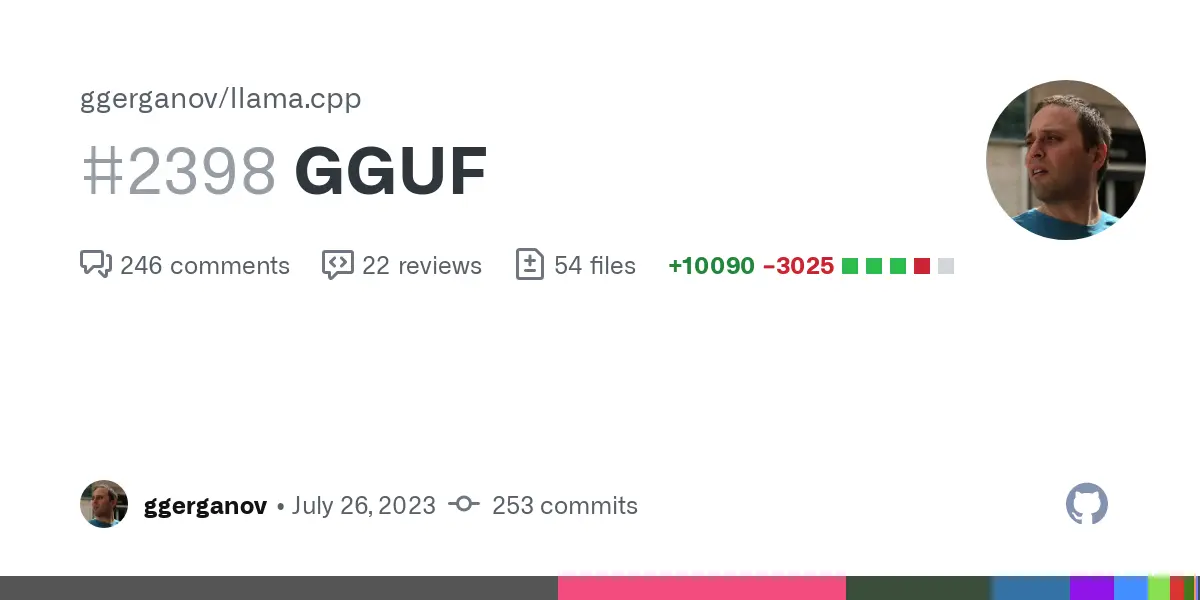

The significance is that we have a new file format standard. The bad news is that it breaks compatibility with the old format, so you’ll have to update to use newer quants and you can’t use your old ones.
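If you’re not sure which format a file on disk is in, a quick check of the header can help. This is only a rough sketch: it assumes a GGUF file starts with the four ASCII bytes `GGUF` followed by a little-endian 32-bit version number, and the function name `gguf_version` is just something made up for illustration, not part of llama.cpp.

```python
import struct

# Assumption: a GGUF file begins with these four ASCII bytes.
GGUF_MAGIC = b"GGUF"


def gguf_version(path):
    """Return the GGUF version number if the file looks like GGUF, else None."""
    with open(path, "rb") as f:
        header = f.read(8)
    # Too short, or the magic bytes don't match: not a GGUF file.
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # Assumption: the version is a little-endian uint32 right after the magic.
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

Calling `gguf_version("some-model.bin")` (a hypothetical filename) gives `None` for anything that doesn’t start with the GGUF magic, which would include the older GGML-era files.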

The good news is that this is the last time that’ll happen (it’s happened a few times so far), as this one is meant to be a lot more extensible and flexible, storing a ton of extra metadata for extra compatibility.

The great news is that this paves the way for better model support, as we’ve already seen with support for Falcon being merged: https://github.com/ggerganov/llama.cpp/commit/cf658adc832badaaa2ca119fe86070e5a830f8f6